Scientists root the concept of dimensions in the idea of describing space. However, the scientific sense of space as an “extended” void with dimensions did not arise until well into the 17th century, when Galileo and Descartes made space the cornerstone of modern physics as something physical with a geometry. By the end of the 17th century, Isaac Newton had expanded the vision to encompass the entire universe, which now became a potentially infinite three-dimensional vacuum.

Interestingly, it was the work of artists several hundred years earlier that foresaw and likely undergirded this scientific breakthrough. In the 14th to 16th centuries, Giotto, Paolo Uccello and Piero della Francesca developed the techniques of what came to be known as perspective—a style originally termed “geometric figuring.” By exploring geometric principles, these painters gradually learned how to draw images of objects in three-dimensional space. By doing so, they reprogrammed European minds to see space in a Euclidean fashion.

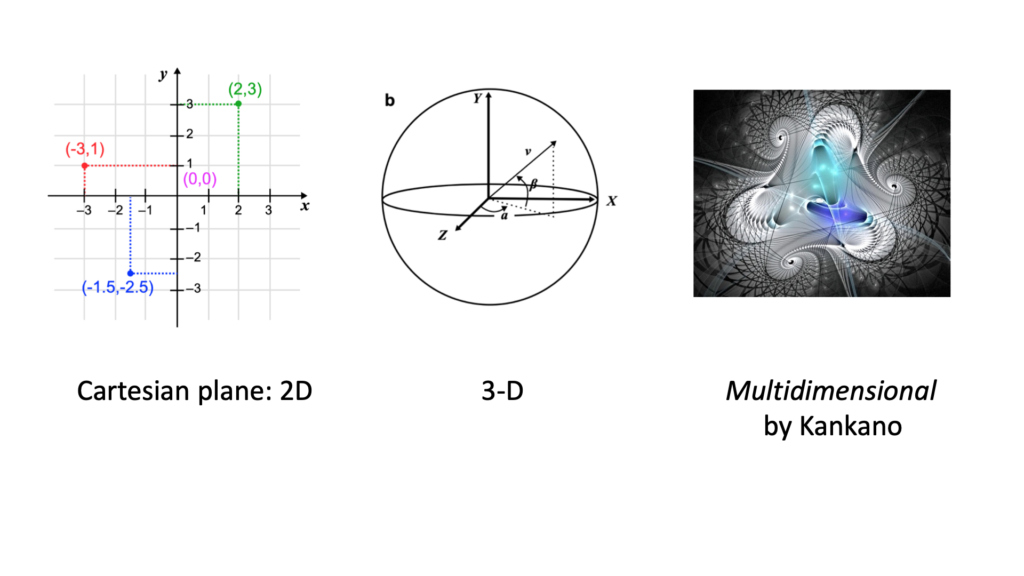

Descartes’ contribution was to make images of mathematical relations and formalize the concept of a “dimension.” He did it in terms of a rectangular grid marked with an x axis and a y axis: a coordinate system. The Cartesian plane is a two-dimensional space because we need only two coordinates to identify any point within it. With this framework, Descartes linked geometric shapes and equations. Thus, we can describe a circle with a radius of 1 by the equation x² + y² = 1. Later, this framework became the basis for the calculus developed by Isaac Newton and G. W. Leibniz.

How does this concept of space affect our lived experience? Imagine living in a Cartesian two-dimensional world in which you are only aware of length and width. You see two objects approach each other at tremendous speed in this flat-plane world. The inevitable outcome will be a crash, you think. When nothing happens and the two objects appear to pass through each other untouched and continue on the other side, you think—it’s a miracle!

The Cartesian plane makes it easy to imagine adding another axis (x, y, z), which lets us describe the surface of a sphere (x² + y² + z² = 1) and thus describe forms in three-dimensional space.
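As a rough illustration of how one equation extends from the plane to space, the sketch below checks whether a point satisfies the unit circle or unit sphere equation by summing its squared coordinates. The function name `on_unit_sphere` and the tolerance value are assumptions made for this example, not part of Descartes' framework.

```python
import math

def on_unit_sphere(point, tol=1e-9):
    """Return True if the squared coordinates of `point` sum to 1.

    Works for any number of coordinates: two give the unit circle,
    three give the surface of the unit sphere.
    `tol` is an assumed tolerance for floating-point rounding.
    """
    return math.isclose(sum(c * c for c in point), 1.0, abs_tol=tol)

print(on_unit_sphere((0.6, 0.8)))       # point in the plane: 0.36 + 0.64
print(on_unit_sphere((0.0, 0.6, 0.8)))  # same point lifted into 3-D
print(on_unit_sphere((1.0, 1.0)))       # 1 + 1 = 2, so not on the circle
```

Adding an axis changes only how many coordinates are summed; the rule itself stays the same.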

Imagine that you are now seeing the same two objects coming towards each other, but are able to see them in three dimensions: not only length and width, but also depth. You see the two objects, which are planes, approach each other at tremendous speed. But because you can see that one is significantly above the other, you know they are safe. It is patently obvious, and definitely not a miracle, that there is no crash, all because you can see into this third dimension.

In 1905, an unknown physicist named Albert Einstein published a paper describing the real world as a four-dimensional setting. In his “special theory of relativity,” Einstein added time to the three classical dimensions of space. Scientists mathematically accommodated this new idea easily since all one has to do is add a new “time” coordinate axis within the Cartesian framework.

Events in our world seem to occur in four dimensions (length, width, depth, and time) and we can see into all of them. Hence, when the same two objects, which we recognize as planes, approach each other at tremendous speed and at the same height, there would be a crash, except that the two planes are flying through the same space at different times. Again, it is obvious there is no crash because of our ability to perceive differences in time. This ability to see in four dimensions makes interactions between objects appear natural and obvious, whereas to those seeing in fewer dimensions they would seem miraculous or paradoxical.
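The thought experiment with the two planes can be sketched in a few lines of code. This is a deliberately simplified model that compares single snapshots rather than flight paths; the `Event` type, the `appear_to_collide` function, and all coordinate values are hypothetical, chosen only to illustrate the idea.

```python
from typing import NamedTuple

class Event(NamedTuple):
    x: float  # length
    y: float  # width
    z: float  # depth (altitude)
    t: float  # time

def appear_to_collide(a: Event, b: Event, dims: int) -> bool:
    """Compare only the first `dims` coordinates, as an observer
    limited to that many dimensions would perceive them."""
    return a[:dims] == b[:dims]

# Same map position, different altitudes (illustrative numbers).
plane_a = Event(x=10.0, y=5.0, z=9000.0, t=0.0)
plane_b = Event(x=10.0, y=5.0, z=11000.0, t=0.0)
print(appear_to_collide(plane_a, plane_b, dims=2))  # True: a "miracle" in 2-D
print(appear_to_collide(plane_a, plane_b, dims=3))  # False: depth separates them

# Same position and altitude, different times.
plane_c = Event(x=10.0, y=5.0, z=9000.0, t=0.0)
plane_d = Event(x=10.0, y=5.0, z=9000.0, t=60.0)
print(appear_to_collide(plane_c, plane_d, dims=3))  # True: a crash seems certain
print(appear_to_collide(plane_c, plane_d, dims=4))  # False: time separates them
```

In each case the apparent paradox disappears once the observer has access to one more coordinate.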

Einstein’s Theory of General Relativity says we live in four dimensions. In contrast, String Theory, developed in the 1960s, says there are at least 10. In 1919, Theodor Kaluza discovered that adding a fifth dimension to Einstein’s equations could account for the interactions of both electromagnetism and gravity, the fundamental forces that govern how objects or particles interact. The problem was that, unlike the previous four, this fifth dimension did not relate directly to our sensory experience. It was just there in the mathematics. More recently, String Theory scientists have shown that an additional five dimensions account for the weak and strong nuclear forces, the two additional fundamental forces of nature. Thus, with 10 dimensions, String Theory can account for ALL the fundamental forces and ALL their interactions. Unfortunately, we have no way of relating our lived experience to this mathematical multidimensional accountability.

We look out at our complex world and try to figure out why accidents happen. Why do young people die? What makes voters attracted to certain ideas? Why do we fall in love with this person and not that one? Why does only one person survive a plane crash? From our human perspective, these questions are difficult to answer, even incomprehensible, because we don’t have full insight into the dimensions at work creating the dynamics of these interactions. If we did, the answers would be as obvious as the sun rising every morning.

Today we know physical interactions result from information exchanged by fundamental particles known as bosons, and are mathematically accounted for in 4-D space by Einstein’s equations. Experiments with bosons, however, suggest there may be forms of matter and energy vital to the cosmos that are not yet known to science. There may, in fact, be additional fundamental forces of nature and distinct dimensions of information.

Indeed, different exchanges of information mediate human interactions. These involve intangible dimensions of unity, beauty, sociality, persistence, love, entanglement, etc. Information in these dimensions affects social interactions and relates more directly to our life. Imagine, for example, two individuals running at each other at full speed and crashing. Without contextual information, how do you understand the action? Is it two angry opponents on a battlefield trying to kill one another? Or, is it two players on opposite teams tackling one another in a game of American football? Knowing the intangible dimensions of the interaction and the information provided gives us an obvious answer.

Preliminary attempts by psychologist Sarah Hoppler and her colleagues (2022) give us hope that a combination of factors can describe social encounters. These researchers have identified six dimensions (actor, partner, relation, activities, context, and evaluation) with three levels of abstraction, based on how people describe their social interactions. They have shown that their approach can depict and account for all conceivable sorts of situations in social interactions, irrespective of whether these are described abstractly or in great detail.

It might be useful, therefore, to ask whether there are limits to our comprehension of additional dimensions of information. Science tells us that physical dimensions may be so tiny and fleeting that we currently can’t detect them. However, in terms of intangible sources of the human experience, we may not be as helpless. We can sense and intentionally improve some of these dimensions (love, sociality, persistence); others we sense and learn to appreciate (beauty, unity); but the vast majority are, for now, still beyond our comprehension (entanglement).

We are still discovering, evolving, and quantifying the full capacity of our human experience. If we accept the statement, often attributed to Pierre Teilhard de Chardin, that “We are not human beings having a spiritual experience; we are spiritual beings having a human experience,” then the voyage of discovery of our multidimensional nature is unlimited and ought to be infinitely enjoyable.